Audio stream across network to remote Raspberry Pi from Pipewire to Pulseaudio

Ten years ago, I set up Pulseaudio to send audio streams across the LAN to a remote Raspberry Pi – from my home office’s desk to the living room… Still working ten years later: a solid solution.

Alas, Debian switching from Pulseaudio to Pipewire put an end to that. In general, Pipewire is a nice improvement over Pulseaudio but, while Pipewire offers extensive Pulseaudio emulation, its large collection of modules does not include module-tunnel-sink and module-tunnel-source.

So, I migrated the Raspberry Pi 1B with Debian Bookworm from Pulseaudio to Pipewire, and set up module-pipe-tunnel – Pipewire’s native TCP streaming. Alas, the result was crackling audio, which I traced to Pipewire saturating the Pi 1B’s tiny CPU and losing frames. Pipewire’s superior sophistication is too heavy for the Pi 1B.

But then, ChatGPT pointed me to another possibility: RTP, a standard protocol for streaming audio. Both Pulseaudio and Pipewire offer module-rtp-send and module-rtp-recv, so RTP is the perfect interoperability protocol – future-proof too. Sooo…

The quick & dirty way takes only three commands:

On the Pulseaudio receiver:

pactl load-module module-rtp-recv latency_msec=50

On the Pipewire emitter:

pactl load-module module-null-sink sink_name=rtp_sink sink_properties="device.description='Remote'" rate=44100

pactl load-module module-rtp-send source=rtp_sink.monitor

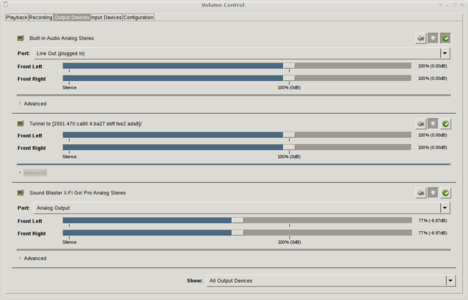

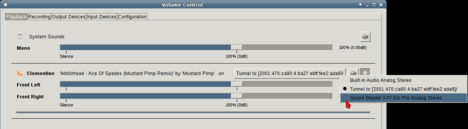

And there you go: launch pavucontrol on the emitter, and you’ll see a new output device called “Remote”, available to sources on the playback tab.

Important: rate=44100 – sample rate must align all along the chain, or you’ll either produce audio artifacts or degrade audio and induce CPU load by resampling.
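One way to check that the rates line up is to read the sample spec that pactl list short sinks prints for each sink. The sketch below assumes the usual tab-separated layout (index, name, driver, sample spec, state); the helper name sink_rate and the sample listing are made up for illustration, not captured from a real system.

```shell
#!/bin/sh
# sink_rate NAME: read a `pactl list short sinks` listing on stdin and
# print the sample rate (in Hz) of the sink called NAME.
# Assumes tab-separated fields: index, name, driver, sample spec, state.
sink_rate() {
    awk -F '\t' -v name="$1" '
        $2 == name {
            n = split($4, spec, " ")   # e.g. "float32le 2ch 44100Hz"
            rate = spec[n]             # last word carries the rate
            sub(/Hz$/, "", rate)       # strip the unit suffix
            print rate
        }'
}

# Illustrative listing only (hypothetical sink names and indexes).
sample_listing() {
    printf '52\trtp_sink\tPipeWire\tfloat32le 2ch 44100Hz\tSUSPENDED\n'
    printf '53\talsa_output.usb\tPipeWire\ts16le 2ch 48000Hz\tRUNNING\n'
}

sample_listing | sink_rate rtp_sink
```

On a live system, the same helper would be fed with pactl list short sinks | sink_rate rtp_sink on the emitter, and with the name of the output sink on the receiver.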

latency_msec=50 is a compromise between latency and CPU load (how often to process the queue) – that value works for me and my Pi 1B: with the 50-millisecond latency setting, the Pi 1B handles the received 44.1 kHz stereo stream at a stable CPU load around 80% – doing anything else simultaneously will push it to the ceiling, drop frames and produce crackling audio.
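To get a feel for what 50 ms means in buffer terms, here is a back-of-the-envelope calculation. It assumes 16-bit stereo samples, which is an assumption about the stream format rather than something measured; adjust bytes_per_sample if your stream differs.

```shell
#!/bin/sh
# How much audio does a 50 ms receive buffer hold?
rate=44100            # frames per second
channels=2            # stereo
bytes_per_sample=2    # assumption: 16-bit samples
latency_ms=50         # the latency_msec value used above

frames=$(( rate * latency_ms / 1000 ))
buffer_bytes=$(( frames * channels * bytes_per_sample ))

echo "$frames frames, $buffer_bytes bytes buffered"
```

A smaller latency_msec shrinks that buffer and makes the receiver wake up more often, which is where the extra CPU load comes from.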

As you have undoubtedly noticed at this point, module-rtp-send is multicast – which is a little sloppy. So let’s add a specific destination to the second emitter command (replace 192.168.1.7 with your IP of choice):

pactl load-module module-rtp-send source=rtp_sink.monitor destination_ip=192.168.1.7

To undo the setup, run the following:

On the Pulseaudio receiver:

pactl unload-module module-rtp-recv

On the Pipewire emitter:

pactl unload-module module-rtp-send

pactl unload-module module-null-sink
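Note that unloading by name removes every loaded instance of that module. If you have other null sinks or RTP senders you want to keep, pactl unload-module also accepts the numeric index that load-module printed. A sketch for digging those indexes out of pactl list short modules, assuming its tab-separated layout (index, name, arguments); module_ids is a hypothetical helper name and the listing is invented for the example:

```shell
#!/bin/sh
# module_ids NAME: read a `pactl list short modules` listing on stdin
# and print the index of every loaded instance of module NAME.
module_ids() {
    awk -F '\t' -v name="$1" '$2 == name { print $1 }'
}

# Illustrative listing only (hypothetical indexes and arguments).
sample_modules() {
    printf '21\tmodule-null-sink\tsink_name=rtp_sink rate=44100\t\n'
    printf '22\tmodule-rtp-send\tsource=rtp_sink.monitor destination_ip=192.168.1.7\t\n'
    printf '23\tmodule-rtp-send\tsource=other.monitor\t\n'
}

sample_modules | module_ids module-rtp-send
```

On the emitter, that would become pactl list short modules | module_ids module-rtp-send, followed by pactl unload-module with the one index you want gone.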

Now, let’s make that permanent.

On the emitter – paste the following into ~/.config/systemd/user/pipewire-rtp.service :

[Unit]

Description=Start RTP send modules on PipeWire startup

After=pipewire.service pipewire-pulse.service

[Service]

Type=oneshot

ExecStart=/usr/bin/pactl load-module module-null-sink sink_name=rtp_sink sink_properties=device.description='Remote' rate=44100

ExecStartPost=/usr/bin/pactl load-module module-rtp-send source=rtp_sink.monitor destination_ip=192.168.1.7

RemainAfterExit=yes

[Install]

WantedBy=default.target

And of course:

systemctl --user daemon-reload

systemctl --user enable pipewire-rtp.service

systemctl --user start pipewire-rtp.service

Then same on the receiver: paste the following into ~/.config/systemd/user/pulseaudio-rtp-recv.service :

[Unit]

Description=Load PulseAudio RTP receive module

After=pulseaudio.service

Requires=pulseaudio.service

[Service]

Type=oneshot

ExecStart=/usr/bin/pactl load-module module-rtp-recv latency_msec=50

[Install]

WantedBy=default.target

Then the enabling dance:

systemctl --user daemon-reexec

systemctl --user daemon-reload

systemctl --user enable --now pulseaudio-rtp-recv.service

The receiver being a headless system, triggering that requires the Raspberry Pi’s “autologin” enabled… I don’t like this, but I’ll make an exception for that highly peripheral specialized system. The previous setup used Pulseaudio in System Mode, but one may argue that it is an even worse hack.

Lastly, the only drawback of this solution: a constant 1.5 Mb/s stream, whether any audio plays or not. I find it strange because RFC 3389 says “RTP allows discontinuous transmission (silence suppression) on any audio payload format. The receiver can detect silence suppression on the first packet received after the silence by observing that the RTP timestamp is not contiguous with the end of the interval covered by the previous packet even though the RTP sequence number has incremented only by one”. RTP running stateless over UDP (contrary to module-tunnel-source, which runs over TCP and establishes sessions) isn’t a problem according to the specification… But Pipewire just doesn’t yet implement silence detection and discontinuous transmission (abbreviated DTX). Also, PipeWire maintains a real-time processing graph where streams are expected to be permanently live, and the concept of “idle stream” is alien – unlike PulseAudio’s “corking” or sink suspension. Anyway, that’s an improvement wish (I opened an issue at Pipewire’s home), but meanwhile I don’t mind a 1.5 Mb/s stream on a local network – just a pity about the increased electricity consumption.
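The figure quoted above is easy to sanity-check: uncompressed 44.1 kHz stereo comes out at roughly 1.4 megabits per second before any RTP, UDP and IP headers are added. The sketch assumes 16-bit samples, which is an assumption about the stream format rather than something read off the wire.

```shell
#!/bin/sh
# Raw audio payload rate of the stream, headers not included.
rate=44100           # frames per second
channels=2           # stereo
bits_per_sample=16   # assumption: 16-bit samples

bits_per_second=$(( rate * channels * bits_per_sample ))
kilobytes_per_second=$(( bits_per_second / 8 / 1000 ))

echo "$bits_per_second bit/s payload, about $kilobytes_per_second kB/s"
```

Packet headers account for the remaining gap up to the observed rate.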

Joy – my Raspberry Pi 1B is good for a second decade in production!

Addendum: to ensure the emitter only sends 44.1 kHz (the receiver doesn’t take 48 kHz), add a /home/{YOUR_USERNAME}/.config/pipewire/pipewire.conf.d/rate.conf containing the following:

context.properties = {

default.clock.rate = 44100

default.clock.allowed-rates = [ 44100 48000 ]

}

Also, USB bus enumeration on the Raspberry Pi 1B is slow enough to prevent the USB audio interface from coming up before Pulseaudio. I’ve tried a bunch of systemd service waiting loop hacks, but they are unreliable, so I went back to loading the receiver module manually. Maybe I’ll end up retiring the 1B after all…