You have a nice amplifier in a corner of the living room, tethered to nice loudspeakers. Alas, the spot where you want to control the music from is far away – maybe in another corner of the living room, maybe in your office or maybe on another continent… No problem – for about 40€ we’ll let you switch your music to this remote destination just as easily as switching between the headphones and speakers directly connected to your workstation. The average narcissistic audiophile pays more than that for an RCA cable.

So, I grabbed a Raspberry Pi model B (first generation – get them cheap !), a micro-USB power supply from the spares box and a stray SD card (at least 1 GB).

The first step is to set it up with an operating system. Since I love Debian, I chose Raspbian. A handy way to install Raspbian quickly and easily is raspbian-ua-netinst, a minimal Raspbian unattended installer that gets its packages from the online repositories – it produces a very clean minimal setup out of the box.

So, go to raspbian-ua-netinst’s latest release page and download the .img.xz file – then put it on the SD card using ‘xzcat /path/to/raspbian-ua-netinst-<latest-version-number>.img.xz > /dev/sdX’ for which you may have to ‘apt-get install xz-utils’. Stick that card into the Raspberry Pi, connect the Raspberry Pi to Ethernet on a segment where it can reach the Internet after having been allocated its parameters through DHCP or NDP+RDNSS – and power it up.
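Writing to the wrong /dev/sdX can wipe a disk you care about, so here is a minimal sketch of a guard around that xzcat command. The write_image name is hypothetical (it is not part of raspbian-ua-netinst). It only checks that the image exists and that the target really is a block device before writing, and it has to run as root.

```shell
# Hypothetical helper: write an .img.xz to an SD card, refusing to proceed
# unless the image file exists and the target is a block device.
write_image() {
    img=$1
    dev=$2
    if [ ! -f "$img" ]; then
        echo "no such image: $img" >&2
        return 1
    fi
    if [ ! -b "$dev" ]; then
        echo "not a block device: $dev" >&2
        return 1
    fi
    # Same command as in the text, followed by a sync so that the card
    # can be pulled safely once the prompt returns.
    xzcat "$img" > "$dev" && sync
}

# Usage (as root, with the real image path and device name filled in):
#   write_image /path/to/image.img.xz /dev/sdX
```

Check with lsblk which device the card actually is before running it: the guard cannot tell your SD card from your backup disk.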

Let raspbian-ua-netinst do its thing for about 15 minutes – time enough to find what IP address your Raspberry Pi uses (look at your DHCP server’s leases or just ‘nmap -sP’ the whole IP subnet to find a Raspberry Pi). Then log in over ssh – default root password is raspbian… Use ‘passwd’ to change it right now.
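To spot the Raspberry Pi in the nmap output, one trick is to look for its MAC address: first-generation boards use the b8:27:eb prefix. Below is a small sketch of a filter for that. The pi_hosts name and the 192.168.1.0/24 subnet are assumptions for illustration, and nmap only shows MAC addresses when run as root on the same Ethernet segment.

```shell
# Hypothetical filter: read nmap's scan report on stdin and print the
# address of every host whose MAC address starts with B8:27:EB.
pi_hosts() {
    awk '
        # Remember the host of the report currently being read
        /^Nmap scan report for / { host = $NF; gsub(/[()]/, "", host) }
        # Print it when the MAC line that follows carries the Pi prefix
        toupper($0) ~ /^MAC ADDRESS: B8:27:EB/ { print host }
    '
}

# Usage (as root, adjust the subnet to yours):
#   nmap -sP 192.168.1.0/24 | pi_hosts
```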

The default install is quite nice, but strangely doesn’t include a couple of important features… So ‘apt-get install raspi-copies-and-fills rng-tools’ – raspi-copies-and-fills improves memory management performance by using a memcpy/memset implementation optimised for the ARM11 used in Raspberry Pi, rng-tools lets your system use the hardware random number generator for better cryptographic performance. To finish setting up the hardware RNG, add bcm2708-rng to /etc/modules.
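Adding the module to /etc/modules can be done by hand in an editor, or with something like the sketch below, which avoids appending the line twice if you run it again. The ensure_line helper is a hypothetical name, not a standard tool.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present.
ensure_line() {
    line=$1
    file=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root):
#   ensure_line bcm2708-rng /etc/modules
#   modprobe bcm2708-rng    # load the module now rather than at next boot
```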

Also, the default install at the time of this writing uses Debian Wheezy, which contains a Pulseaudio version too old for our purposes – we need Debian Jessie which offers Pulseaudio 5 instead of Pulseaudio 2. And anyway, Jessie is just plain better – so let your /etc/apt/sources.list look like this:

deb http://mirrordirector.raspbian.org/raspbian jessie main firmware contrib non-free rpi

deb http://archive.raspbian.org/raspbian jessie main contrib non-free rpi

Then ‘apt-get update && apt-get -y dist-upgrade && apt-get -y autoremove’… This should take a while.

Now install Pulseaudio, the piece of software that will offer a receiving end to your network audio stream: ‘apt-get install pulseaudio’. I assume you have Pulseaudio already set up on the emitter station – your favourite distribution’s default should do fine, as long as it provides Pulseaudio version 5 or later (use ‘pulseaudio --version’ to check that).
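If you want to script that version check on both ends, here is a minimal sketch. It assumes the version is reported as a line of the form "pulseaudio 5.0", and the pa_is_recent_enough name is made up for the occasion.

```shell
# Hypothetical check: read the output of `pulseaudio --version` on stdin
# and succeed only if the major version is 5 or higher.
pa_is_recent_enough() {
    awk '
        { split($2, v, "."); ok = (v[1] + 0 >= 5) }
        END { exit !ok }
    '
}

# Usage:
#   pulseaudio --version | pa_is_recent_enough && echo "good to go"
```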

Pulseaudio is primarily designed to cater to desktop usage by integrating with the interactive session of a logged-in user – typically under control of the session manager of whatever graphical desktop environment. But we don’t need such a complex thing here – a dumb receptor devoid of any extra baggage is what we want. For this we’ll use Pulseaudio’s system mode. Pulseaudio’s documentation repeatedly hammers that running in system mode is a bad idea – “nobody should run it that way, with the exception of very few cases”… Well – here is one of those very few cases.

In their zeal to discourage anyone from running Pulseaudio in system mode, the Pulseaudio maintainers do not ship any startup script in the distribution packages – this ensures that users who don’t know what they are doing don’t stray off the beaten path of orthodox desktop usage and end up on a forum complaining that Pulseaudio doesn’t work. But it also annoys the other users, who actually need Pulseaudio to run at system startup – but that is easily fixable thanks to another creation of Lennart’s gang: all we need is a single file called a systemd unit… I copied one from this guy who also plays with Pulseaudio network streaming (but in a different way – more on that later). This systemd unit was written for Fedora, but it works just as well for Raspbian… Copy this and paste it in /etc/systemd/system/pulseaudio.service :

[Unit]

Description=PulseAudio Daemon

[Install]

WantedBy=multi-user.target

[Service]

Type=simple

PrivateTmp=true

ExecStart=/usr/bin/pulseaudio --system --realtime --disallow-exit --no-cpu-limit

Then ‘systemctl enable pulseaudio’ and ‘systemctl start pulseaudio’ – you now have a properly set up Pulseaudio daemon. Now is a good time to take a moment to consider how much more tedious writing a SysVinit script would have been compared to just dropping this systemd unit in place.

Now let’s see the meat of this article: the actual setup of the audio stream. If you stumbled upon this article, you might have read about other methods to the same goal, such as this one or this one. They rely on the server advertising its Pulseaudio network endpoint through Avahi’s multicast DNS using the module-zeroconf-publish Pulseaudio module. This lets the client discover the server, so that the user can select it as an audio destination after having told paprefs that remote Pulseaudio devices should be available locally. In theory it works well, and it probably works well in practice for many people, but Avahi’s behaviour may be moody – or, in technical terms, subject to various network interferences that you may or may not be able to debug easily… Struggling with it led me to find an alternative. By the way, Avahi is another one of Lennart’s babies – so that might be a factor in Pulseaudio’s strong inclination towards integrating with it.

Discoverability is nice in a dynamic environment but, in spite of my five daughters, my apartment is not that dynamic – my office and the living room amplifier won’t be moving anytime soon. So why complicate the system with Avahi ? Can’t we just have a static configuration by declaring a hardcoded link once and for all ? Yes we can, with module-tunnel-sink-new & module-tunnel-source-new !

Module-tunnel-sink-new and module-tunnel-source-new are the reason why we require Pulseaudio 5 – they appeared in this version. They are a reimplementation of module-tunnel-sink, using libpulse instead of reinventing the wheel by using their own implementation of the Pulseaudio protocol. At some point in the future, they will lose their -new suffix and officially replace module-tunnel-{sink,source} – at that moment your setup may break until you rename them in your /etc/pulse configuration to module-tunnel-sink and module-tunnel-source… But that is far in the future – for today it is all about module-tunnel-sink-new and module-tunnel-source-new !

Now let’s configure this ! Remember that we configured the Raspberry Pi’s Pulseaudio daemon in system mode ? That means the relevant configuration is in /etc/pulse/system.pa (not in /etc/pulse/default.pa – leave it alone, it is for the desktop users). So add those two load-module lines to the bottom of /etc/pulse/system.pa – the first one to declare the IP addresses authorized to use the service, the second one to declare the IP address of the client that will use it… Yes – it is a bit redundant, but that is the way (two single load-module lines – don’t mind the spurious carriage return caused by this blog’s insufficient width):

load-module module-native-protocol-tcp auth-ip-acl=127.0.0.1;2001:470:ca99:4:21b:21ff:feaa:99c9

load-module module-tunnel-source-new server=[2001:470:ca99:4:21b:21ff:feaa:99c9]

It is possible to authenticate the client more strictly using a cookie file, but for my domestic purposes I decided that identification by IP address is enough – and let’s leave some leeway for my daughters to have fun discovering that, spoofing it and streaming crap to the living room.

Also, as some of you may have noticed, this works with IPv6, but it works well with legacy IPv4 too – in which case the address must not be enclosed in brackets.
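The bracket rule for the server parameter is easy to get wrong when switching between address families, so here is a small sketch that spells it out. The pa_server_arg name is hypothetical, and the addresses in the usage lines are documentation examples rather than real hosts.

```shell
# Hypothetical helper: print the server= argument for a tunnel module,
# with brackets around IPv6 addresses and none around IPv4 addresses.
pa_server_arg() {
    case $1 in
        *:*) printf 'server=[%s]\n' "$1" ;;   # contains a colon: IPv6
        *)   printf 'server=%s\n' "$1" ;;     # no colon: IPv4
    esac
}

# Usage:
#   pa_server_arg 2001:db8::1    prints server=[2001:db8::1]
#   pa_server_arg 192.0.2.10     prints server=192.0.2.10
```

Note that this only concerns the server parameter: in the auth-ip-acl list shown above, the IPv6 address is written without brackets.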

Anyway, don’t forget to ‘systemctl restart pulseaudio’ after configuring.
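After the restart, a quick sanity check is to see whether something is listening on TCP port 4713, the default port of module-native-protocol-tcp. The sketch below filters the output of ‘ss -ltn’ for that; the pa_tcp_listening name is hypothetical and it assumes you kept the default port.

```shell
# Hypothetical check: read `ss -ltn` output on stdin and succeed if a
# listening socket is bound to port 4713 (Pulseaudio's default TCP port).
pa_tcp_listening() {
    awk '
        $1 == "LISTEN" && $4 ~ /:4713$/ { found = 1 }
        END { exit !found }
    '
}

# Usage (on the Raspberry Pi):
#   ss -ltn | pa_tcp_listening && echo "Pulseaudio is listening"
```

If nothing is listening, ‘journalctl -u pulseaudio’ is a reasonable place to look for a typo in system.pa.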

Then on the client side, add this single load-module line to the bottom of /etc/pulse/default.pa (not in /etc/pulse/system.pa – leave it alone, it is for headless endpoints and your client side is most probably an interactive X session). This is one single load-module line – don’t mind the spurious carriage return caused by this blog’s insufficient width:

load-module module-tunnel-sink-new server=[2001:470:ca99:4:ba27:ebff:fee2:ada9] sink_name=MyRemoteRaspberry

Actually I didn’t use sink_name, but I understand you might want to designate your network sink with a friendly nickname rather than an IPv6 address – though why would anyone not find those lovely IPv6 addresses friendly ?

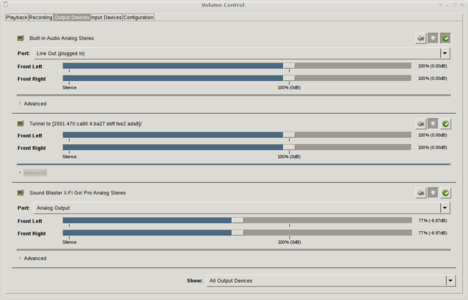

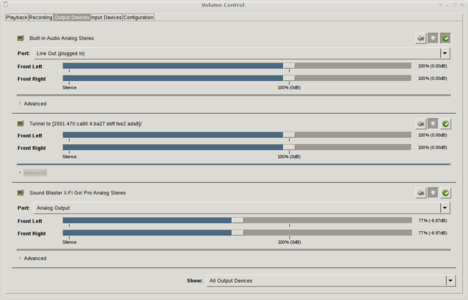

Anyway, log out of your X session, log back in and you’re in business… You have a new output device waiting for you in the Pulseaudio volume control:

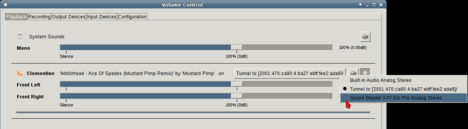

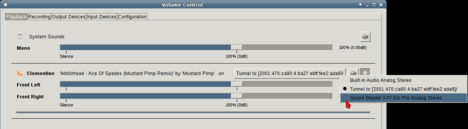

So now, while one of your sound applications (such as the sweet Clementine music player pictured here) plays, you can switch it to the remote device:

That’s all folks – it just works !

While you are joyously listening to remote music, let’s have a word about sound quality. Like any sound circuit integrated on a motherboard, where it cohabits with a wild bunch of RF emitters, the Raspberry Pi’s sound is bad. The Model B+ claims “better audio – the audio circuit incorporates a dedicated low-noise power supply” but actual testing shows that it is just as bad and sometimes even worse. So I did what I nowadays always do to get decent sound: use a cheap sound adapter on a USB dongle, in the present case a ‘Creative Sound Blaster X-FI Go Pro’, which at 30€ gets you great bang for the buck.

As luck would have it, the Raspberry Pi’s Pulseaudio offers it as the default sink – so I did not have to specify anything in my client configuration. That may not be the case on yours – if it isn’t, you must use module-tunnel-sink-new’s sink parameter to tell it which sink to use. Since the Raspberry Pi’s Pulseaudio runs in system mode, you won’t be able to ‘pactl list sinks’, so you’ll have to detour through a run in debug mode to learn the names of the available sinks.

Sound quality is also a reason why this method is better than a really long stereo line extension cord whose attenuation would degrade sound noticeably.

Well, that was verbose and long-winded – but I hope to have clearly covered everything… If there is anything you feel I should explain better, please ask questions in the comments !